29 Jul

In all our online spaces, especially those where children are present, it’s important to be vigilant in monitoring for suspicious behavior and preventing online abusers and groomers from doing harm. Detecting grooming in its early stages is critical to ensuring a safe environment; when early signs are missed, children can be put at risk of abuse both onscreen and in real life. It’s an issue that continues to be a challenge for online games and social platforms.

Issues around Detecting Online Grooming

Grooming behaviors can be difficult to spot – groomers often do their best to fly under the radar, sometimes spending months building trust with their targets before attempting to escalate their behavior. Victims, especially young children, can’t be relied on to recognize what is happening and file a report, so it’s essential to proactively monitor for suspicious behavior.

Because of these factors, identifying potential groomers can pose a huge challenge for trust and safety teams. On top of that, even if a suspect is flagged appropriately, it can take a moderator days of work to comb through chat logs and other evidence to conduct a thorough investigation. We designed GGWP’s grooming alerts feature to address both needs: identifying potential groomers sooner and making it easier to conduct thorough and efficient investigations.

Detecting and Preventing Online Child Grooming Behaviors

With more common forms of online abuse and toxicity, we can usually consider each case in terms of isolated incidents, for example when a player targets another player with verbal abuse during a competitive match.

Grooming, on the other hand, usually can’t be detected by looking at a single, standalone incident. The signs of grooming can be subtle, and while messages can have indications of grooming, they rarely justify a case in isolation, especially compared to more blatant forms of abuse. But when we zoom out and take into account a user’s history over a period of time, our models can more confidently predict whether or not a user is engaging in predatory behavior.

How GGWP Works to Detect Grooming Behaviors

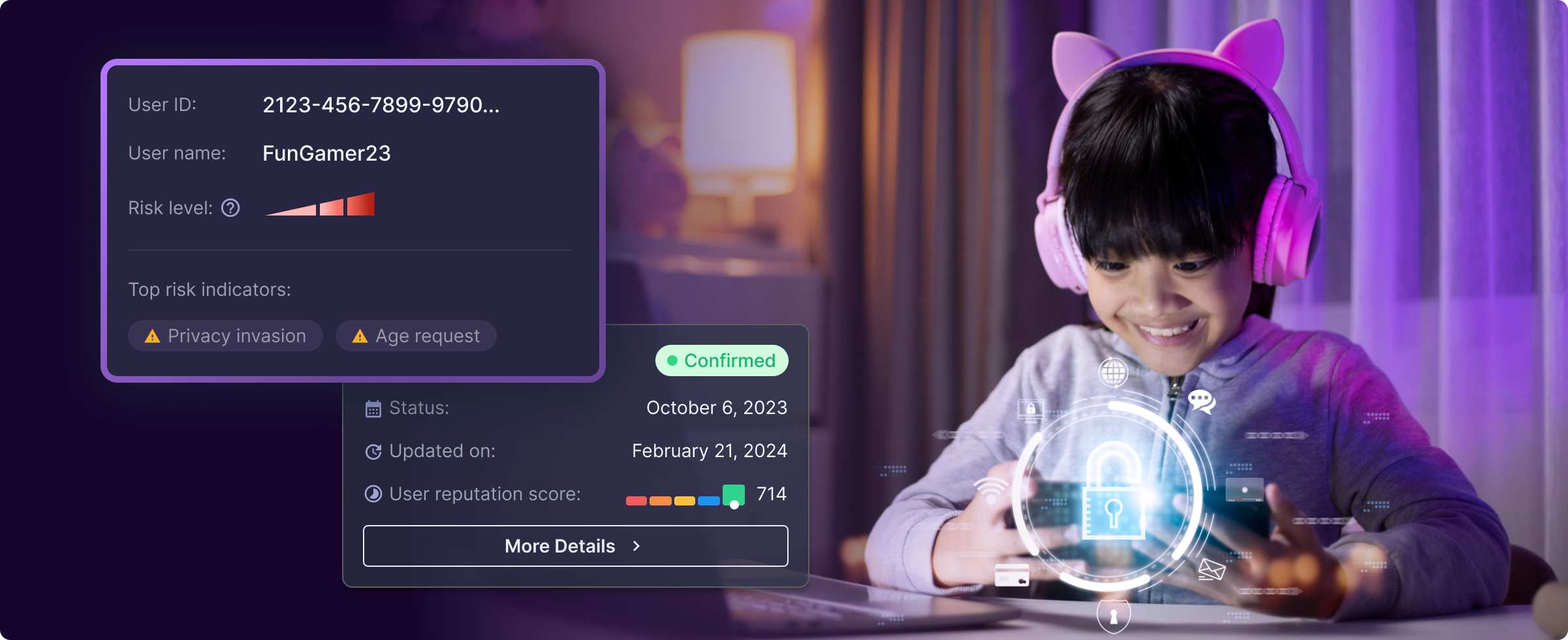

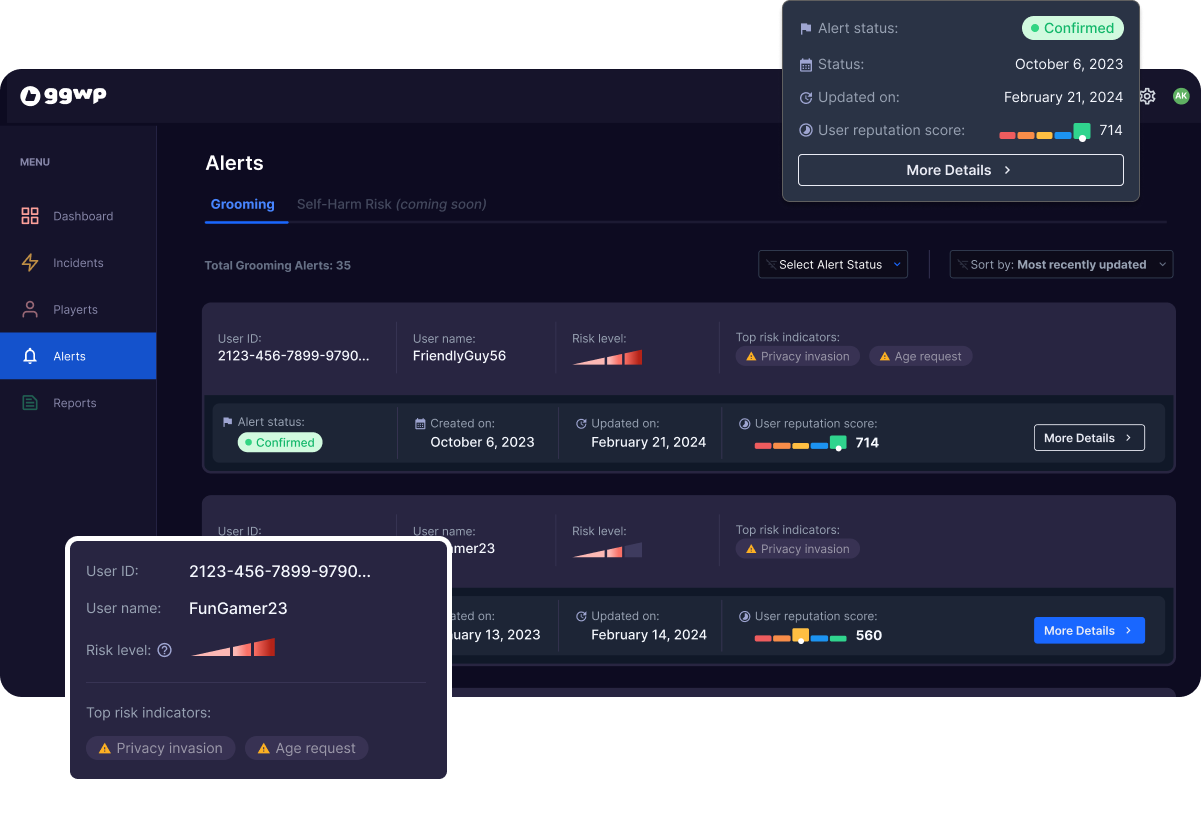

To detect grooming across a user’s history, we monitor for a variety of indicators, such as asking about age, flirtatious behavior, sexual content, asking to keep secrets, requests to meet or take conversations off platform, and more. Then, looking at user history, we assign a risk score to each user and flag users for review based on their risk level. This way, T&S teams have visibility into not only who is engaging in suspicious behavior, but also who poses the highest risk, so moderators can triage appropriately.
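As a rough illustration of the idea of scoring users from their accumulated history, here is a minimal sketch. It is not GGWP’s model: the indicator names, the weights, the simple weighted sum, and the threshold are all assumptions made up for this example.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical indicator weights, chosen only for illustration.
INDICATOR_WEIGHTS = {
    "age_request": 1.0,
    "flirtation": 1.5,
    "sexual_content": 3.0,
    "secrecy_request": 2.5,
    "off_platform_request": 2.0,
}


@dataclass(frozen=True)
class FlaggedMessage:
    """One message in a user's history that matched an indicator."""
    user_id: str
    indicator: str


def risk_scores(flags):
    """Sum the indicator weights over each user's full flagged history."""
    totals = defaultdict(float)
    for flag in flags:
        # Unknown indicators contribute nothing in this sketch.
        totals[flag.user_id] += INDICATOR_WEIGHTS.get(flag.indicator, 0.0)
    return dict(totals)


def triage_queue(flags, threshold=4.0):
    """Return (user_id, score) pairs at or above the threshold, highest risk first."""
    scores = risk_scores(flags)
    flagged = [(user, score) for user, score in scores.items() if score >= threshold]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)
```

No single message in this sketch crosses the threshold on its own; a user only enters the review queue once several signals accumulate, which mirrors the point that grooming is rarely visible in one isolated incident.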

Once a suspect is identified, it’s crucial that moderators have the information and tools they need to conduct an efficient and thorough investigation. To help moderators comb through a user’s history (which can span several months), we flag messages we think are suspicious and tag them with the corresponding indicator. Moderators can also review each suspicious message in the context of the chat, to give a clearer picture of the conversations between the suspect and potential victims.
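Reviewing a flagged message in the context of its chat can be sketched as a simple window over the conversation. This is only an illustration under assumed names: `flagged_with_context`, its `window` parameter, and the list-of-strings chat format are hypothetical and not part of GGWP’s product.

```python
def flagged_with_context(messages, flagged_indices, window=2):
    """Map each flagged message index to the slice of chat surrounding it.

    messages: the conversation as an ordered list of message strings.
    flagged_indices: positions in `messages` that matched an indicator.
    window: how many messages to show before and after each flagged one.
    """
    context = {}
    for index in sorted(flagged_indices):
        start = max(0, index - window)                 # clamp at start of chat
        end = min(len(messages), index + window + 1)   # clamp at end of chat
        context[index] = messages[start:end]
    return context
```

A moderator-facing view could then render each slice with the flagged message highlighted and its indicator tag attached.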

When conducting grooming investigations, moderators are often looking for multiple pieces of evidence, such as the offender knowing or having reason to believe the target is a minor, along with inappropriate behavior like sexually explicit messages or requests for personal information. To help gather and organize this evidence, we also include the ability to pin specific messages, so key evidence can be pulled up quickly during an investigation.

Preventing online grooming can be challenging without the right tools and policies in place, and it’s our goal to make it as easy as possible for teams to detect the early signs and prevent harm to their communities. Contact us to learn more about how GGWP can help keep your community safe.