24 Oct

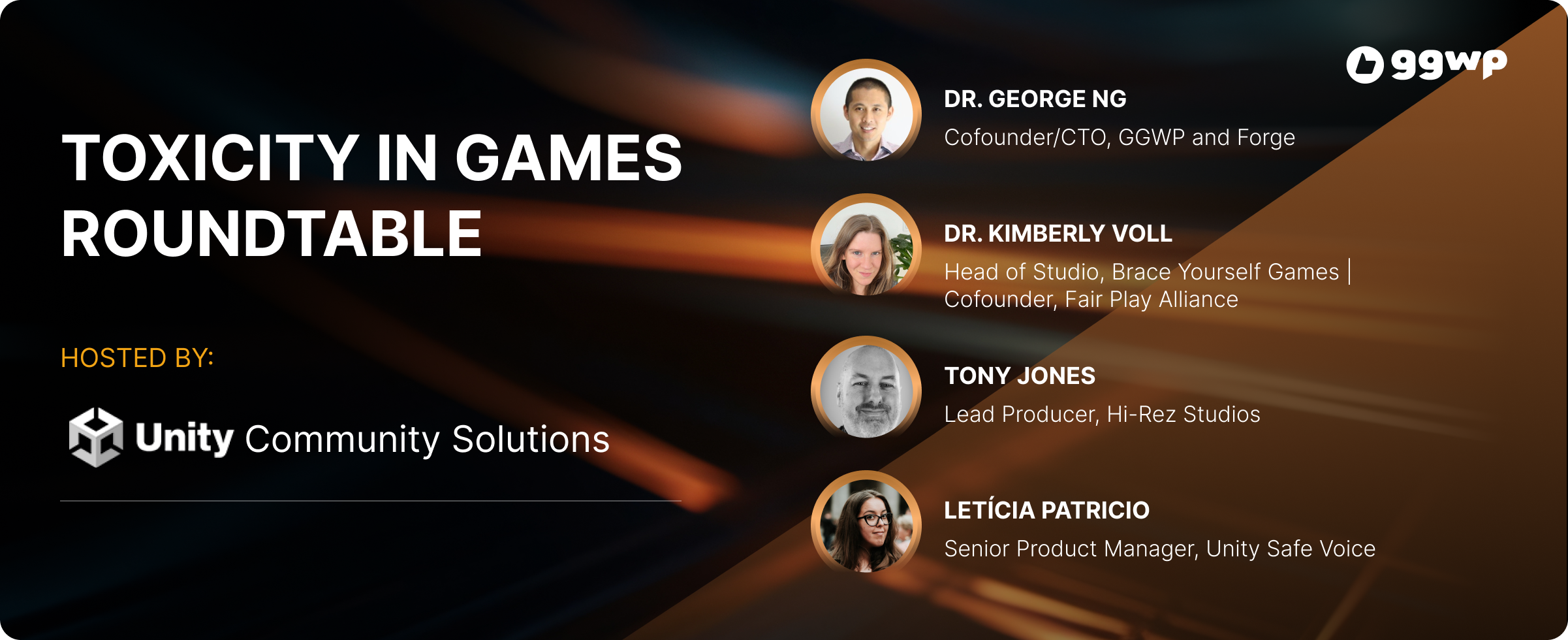

Unity Toxicity in Games Roundtable

On October 19, our CTO Dr. George Ng joined Dr. Kimberly Voll, Tony Jones, and Leticia Patricio for a roundtable discussion on Toxicity in Gaming hosted by Unity Community Solutions. More than 1,300 attendees joined us for a lively discussion on the current state of toxicity in tech and ways that technology can be used to solve the problem.

We know many developers in our community, large and small, are working on their anti-toxicity programs (the more than 1,300 attendees confirmed as much). For those who couldn’t make it to the event, we wanted to share a few highlights of the discussion that could help as you design and implement your own program.

The game toxicity problem continues to grow

According to Unity’s 2023 Toxicity in Games Multiplayer Report, toxic behavior in online gaming communities continues to be a major issue, with 74% of players reporting increasing harassment, and 67% of players saying they are likely to stop playing a game after toxic experiences.

This won’t surprise most people reading this post, since attention to toxicity has been on the rise as well. This year, 53% of developers noticed an uptick in toxic behavior and 96% of players reported taking action against toxicity (up from 66% in 2021).

There are several reasons for this increase: people are playing games more, our collective tolerance for harassment and disruptive behavior has been going down, and AI has also increased our ability to detect it. As the scale of the problem and the bar for addressing it continue to grow, many multiplayer developers feel overwhelmed.

A few highlights of the strategies developers can use include the following:

To get started, examine root causes in your game and start small

In general, toxicity arises from both individual behaviors and systemic issues, and it’s essential for game developers to address both. On an individual level, few players intend to be harmful when they start to play, but many can be provoked by game dynamics that amplify frustration. While devs can use sanctions to punish players, sanctions are only effective as part of a broader effort to set standards and communicate them to the community.

Systemically, certain game design elements can inadvertently enable toxic conduct by introducing unnecessary competition or leaving the rules unclear. Humans are also social, and we adjust our behavior based on the norms we observe, so setting norms of behavior and proactively including positive representation of marginalized groups is essential.

You don’t have to tackle everything at once. A few approaches include:

- Surveying your players – current and recently churned – about what bothers them most

- Analyzing the biggest behaviors that precede churn in your game

- Looking at whatever data you have (e.g. player reports) to identify your biggest issues

- Observing what players are talking about
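As one illustration of the data-driven approaches above, the sketch below tallies player reports by category to surface the most common issues. The report records, the `category` field, and the category labels are all hypothetical; substitute whatever your own reporting system actually stores.

```python
from collections import Counter


def top_report_categories(reports, n=3):
    """Return the n most frequently reported categories as (category, count) pairs."""
    counts = Counter(report["category"] for report in reports)
    return counts.most_common(n)


# Hypothetical sample data; real reports would come from your own tooling.
sample_reports = [
    {"player_id": "p1", "category": "hate_speech"},
    {"player_id": "p2", "category": "griefing"},
    {"player_id": "p3", "category": "hate_speech"},
    {"player_id": "p4", "category": "cheating"},
    {"player_id": "p5", "category": "hate_speech"},
    {"player_id": "p6", "category": "griefing"},
]

print(top_report_categories(sample_reports, n=2))
# prints [('hate_speech', 3), ('griefing', 2)]
```

Even a simple count like this can help you decide which pain point to tackle first.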

Your anti-toxicity program will continue for as long as your game does, and you will need to iterate – so start from your biggest pain points, knowing you will improve over time as you get feedback from your community.

Operationalize your values and prepare to iterate

Every game is different: everyone wants a positive community, but getting a level deeper reveals that those words mean different things to different people. What do you consider fair game vs no-go behavior? Instead of proposing blanket solutions for every game, the panelists focused on frameworks and iterative processes, including:

- Defining values early and being concrete about them rather than vague (everyone wants a positive community, but what does that mean to you?)

- Starting with a code of conduct aligned to values

- Performing ongoing risk audits with your own user data and feedback

- Building a passionate team and celebrating their effort

- Involving the community in changes

Since players are creative and game and social dynamics change, you will inevitably go through an evergreen process of monitoring and responding to evolving community norms. Moderation is not a one-time investment but an ongoing project throughout the game’s lifecycle.

Prioritize this investment – the business case is there

The spirited discussion reinforced that managing online toxicity is a necessity for multiplayer games, especially with the increase in toxic user-generated content (UGC) that generative AI can produce. Our panelists uniformly emphasized that the churn cost of toxicity is so high (GGWP estimates games can experience 20% additional attrition due to toxicity) that investing in moderation is a smart business decision, not just a nice thing to do.

At the same time, creating social infrastructure that enables healthy player experiences is both an opportunity and responsibility for today’s game developers. Your moderation program should work hand in hand with game design and strategic choices about representation: if you want to avoid toxic experiences for marginalized groups, positive and proactive representation of these groups to set the right social standard is essential.

Employ tech selectively with the dual approach

The past few months have been a very dynamic time in all of gaming with AI becoming more widely used across domains, and trust & safety is no exception. There is a range of views on where technology should fit. Some games have seen community backlash from overly automated systems, which can be prevented with thoughtful communication and implementation of the systems.

Our consensus was that AI should enable human moderators, not replace them entirely. The problem is so far beyond human scale that AI has a crucial role in moderation, but human moderators will continue to be needed for complex judgment calls. George emphasized that AI has the benefit of scale and that “there are nuanced areas where we need humans, so it will be a complementary system.”

Our approach at GGWP has been to combine AI-based triage and detection with game context and comprehensive player reputations to reduce moderation volume and ensure that humans weigh in where their expertise is needed. One benefit is that humans can take the time they need to make those tough calls. Meanwhile, the system keeps the community safe by taking lightning-fast action in the majority of cases, where its confidence in the action is high.
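To make the idea of confidence-based triage concrete, here is a minimal sketch. It is not GGWP's actual implementation: the thresholds, the `reputation` score, the routing rules, and every function and field name are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned per game and community.
AUTO_ACTION_THRESHOLD = 0.95
DISMISS_THRESHOLD = 0.20
LOW_REPUTATION = 0.5


@dataclass
class Incident:
    player_id: str
    model_confidence: float  # assumed 0..1 score from a detection model
    reputation: float        # assumed 0..1, higher means a better history


def route(incident: Incident) -> str:
    """Decide whether automation or a human moderator handles an incident."""
    # High confidence plus a poor track record: act automatically.
    if (incident.model_confidence >= AUTO_ACTION_THRESHOLD
            and incident.reputation < LOW_REPUTATION):
        return "auto_action"
    # Very low confidence: likely a false alarm, so drop it from the queue.
    if incident.model_confidence <= DISMISS_THRESHOLD:
        return "dismiss"
    # Everything else is a judgment call for a human moderator.
    return "human_review"


print(route(Incident("a", model_confidence=0.99, reputation=0.1)))
print(route(Incident("b", model_confidence=0.60, reputation=0.3)))
```

In this sketch, only clear-cut cases are automated, and anything ambiguous (including a high-confidence flag on a player with a good history) goes to a human.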

To learn more about GGWP’s AI-based moderation solutions, contact us.